How do you decide which parts of your data are actually worth looking at? Whether you work in machine learning or data analysis, or are simply curious about how information is measured, Information Gain gives you a concrete way to answer that question.

It measures how much knowing one feature reduces your uncertainty about an outcome. That makes it a simple and effective tool for finding the most valuable clues in a sea of data.

Keep reading to see how Information Gain is defined, how to calculate it, and where it helps.

Information Gain Basics

Information Gain is a key idea in data science. It scores each feature by how much splitting the data on it reduces uncertainty about the target, so an algorithm can choose the best feature to split on. Splits chosen this way tend to give models that are more accurate and easier to understand. The idea underlies several machine learning methods, most notably decision trees.

Concept And Importance

Information Gain measures how much knowing a feature's value reduces uncertainty about the class label. In other words, it shows how well a feature separates data into classes. Higher Information Gain means better separation, which helps models make clearer decisions. It guides algorithms to pick the features that matter most.

Relation To Entropy

Entropy measures disorder or uncertainty in data. High entropy means the classes are evenly mixed. Zero entropy means every sample belongs to the same class. Information Gain is the amount by which entropy decreases after the data is split by a feature. The goal is to lower entropy and increase order, which makes data easier to classify and predict.
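To make the idea of "mixed" versus "ordered" data concrete, here is a minimal Python sketch of an entropy function. The function name and the toy labels are illustrative assumptions, not part of any particular library.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    # Sum of p * log2(1/p), which equals -sum of p * log2(p).
    # Classes with zero samples never appear in the Counter.
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

mixed = ["yes", "no", "yes", "no"]   # classes evenly mixed
pure = ["yes", "yes", "yes", "yes"]  # a single class

print(entropy(mixed))  # 1.0
print(entropy(pure))   # 0.0
```

An even two-class mix gives the maximum of 1 bit, and a pure group gives 0. Anything in between falls between those two values.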

Role In Decision Trees

Decision tree algorithms such as ID3 use Information Gain to choose splitting points. Each node splits the data on the feature with the highest Information Gain. This creates branches that group similar data. It helps build simple and accurate trees. The process repeats on each branch until the data groups are pure or small enough.
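The recursive procedure described above can be sketched in a few lines of Python. This is a simplified ID3-style illustration for categorical features only. The function names, the `min_size` parameter, the nested-dict tree format, and the toy data are all assumptions made for the example.

```python
from collections import Counter
from math import log2

def entropy(labels):
    total = len(labels)
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

def information_gain(rows, labels, feature):
    """Entropy of the node minus the weighted entropy of its children."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    weighted = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - weighted

def build_tree(rows, labels, features, min_size=2):
    """Return a nested dict; leaves are the majority class label."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the node is pure, too small, or has no features left.
    if len(set(labels)) == 1 or len(labels) < min_size or not features:
        return majority
    best = max(features, key=lambda f: information_gain(rows, labels, f))
    if information_gain(rows, labels, best) <= 1e-12:
        return majority  # no feature reduces entropy any further
    tree = {"feature": best, "children": {}, "default": majority}
    remaining = [f for f in features if f != best]
    for value in set(row[best] for row in rows):
        subset = [(r, y) for r, y in zip(rows, labels) if r[best] == value]
        sub_rows = [r for r, _ in subset]
        sub_labels = [y for _, y in subset]
        tree["children"][value] = build_tree(sub_rows, sub_labels, remaining, min_size)
    return tree

# Hypothetical toy data: only "outlook" separates the classes.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "stay", "stay"]

tree = build_tree(rows, labels, ["outlook", "windy"])
print(tree["feature"])  # outlook
```

Production implementations add pruning, handling of missing values, and support for numeric features, none of which are shown here.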

Calculating Information Gain

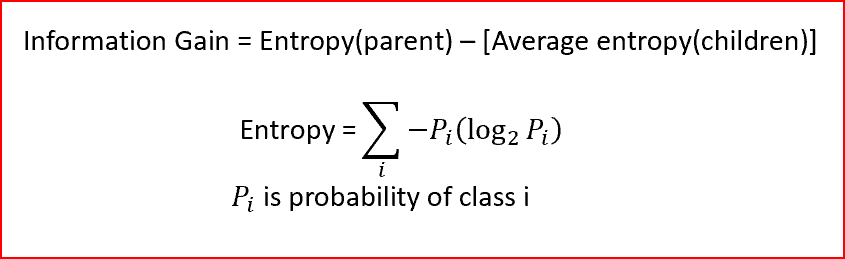

Calculating Information Gain helps measure how well a feature splits data in decision trees. It shows the reduction in uncertainty after the split. This calculation guides choosing the best attribute for splitting data.

The process involves comparing entropy before and after the split. Entropy measures disorder or impurity in the dataset. Lower entropy means more order and better classification.

Step-by-step Process

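First, calculate the entropy of the whole dataset. Use the formula: entropy = -∑ p(x) log₂ p(x). Here, the sum runs over the classes x, and p(x) is the proportion of samples that belong to class x. A class with no samples contributes zero to the sum.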

Next, split the data based on a feature’s values. Calculate entropy for each split group.

Find the weighted average entropy of these groups. Weight depends on the group size relative to the whole.

Finally, subtract the weighted entropy from the original entropy. The result is the information gain.
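The four steps above map directly onto a short Python function. This is a minimal sketch that assumes a categorical feature given as a list of values aligned with a list of class labels. The function names and the toy inputs are illustrative.

```python
from collections import Counter, defaultdict
from math import log2

def entropy(labels):
    total = len(labels)
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

def information_gain(feature_values, labels):
    # Step 1: entropy of the whole dataset.
    parent = entropy(labels)
    # Step 2: split the labels into groups by feature value.
    groups = defaultdict(list)
    for value, label in zip(feature_values, labels):
        groups[value].append(label)
    # Step 3: weighted average entropy, weighting each group by its share of samples.
    n = len(labels)
    weighted = sum(len(g) / n * entropy(g) for g in groups.values())
    # Step 4: the reduction in entropy is the information gain.
    return parent - weighted

labels = ["yes", "yes", "no", "no"]
print(information_gain(["a", "a", "b", "b"], labels))  # 1.0, a perfect split
print(information_gain(["a", "b", "a", "b"], labels))  # 0.0, an uninformative split
```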

Examples With Sample Data

Imagine a dataset with 10 samples: 6 are positive, 4 negative. Original entropy is calculated first.

Split samples by a feature: 4 positives and 1 negative in group A, 2 positives and 3 negatives in group B.

Calculate entropy for group A and group B separately. Then find weighted average entropy.

Subtract weighted entropy from original entropy. The difference is the information gain for that feature.
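Worked through with numbers, the example looks like this. The symbols H (entropy), S (the full dataset), and IG (information gain) are notation introduced here for convenience, and the values are rounded to three decimals.

```latex
\begin{aligned}
H(S) &= -\tfrac{6}{10}\log_2\tfrac{6}{10} - \tfrac{4}{10}\log_2\tfrac{4}{10} \approx 0.971 \\
H(A) &= -\tfrac{4}{5}\log_2\tfrac{4}{5} - \tfrac{1}{5}\log_2\tfrac{1}{5} \approx 0.722 \\
H(B) &= -\tfrac{2}{5}\log_2\tfrac{2}{5} - \tfrac{3}{5}\log_2\tfrac{3}{5} \approx 0.971 \\
H_{\text{split}} &= \tfrac{5}{10}\,H(A) + \tfrac{5}{10}\,H(B) \approx 0.846 \\
IG &= H(S) - H_{\text{split}} \approx 0.971 - 0.846 = 0.125
\end{aligned}
```

The gain is small because group B is almost as mixed as the original dataset. Only group A became noticeably purer.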

Common Pitfalls

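Ignoring the size of the split groups can mislead results, so always weight each group's entropy by its share of the samples. A very small group might show low entropy purely by chance, which says little about how the feature behaves on new data.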

Calculating entropy incorrectly leads to wrong values, and mixing logarithm bases is a common cause. Use the same base throughout. Base 2 is the usual choice and gives entropy in bits.
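A quick check shows what the log base actually changes. In this sketch, the `log_fn` parameter is an illustrative assumption that lets the same function run with base 2 or the natural logarithm.

```python
from math import log, log2

def entropy(probs, log_fn=log2):
    """Entropy of a probability distribution under the given log function."""
    return sum(p * log_fn(1 / p) for p in probs if p > 0)

probs = [0.6, 0.4]
bits = entropy(probs)        # base 2
nats = entropy(probs, log)   # natural logarithm

print(round(bits, 3))  # 0.971
print(round(nats, 3))  # 0.673
```

The two results differ only by the constant factor ln 2, so feature rankings stay the same under either base. Problems arise only when values computed with different bases are compared or combined.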

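For continuous features, the choice of bins or thresholds changes the result. Bins that are too coarse hide useful boundaries, and bins that are too fine create many tiny groups. Choose bins carefully, or search over candidate thresholds, for meaningful splits.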
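One common alternative to fixed bins is to try a cut point between each pair of neighboring values and keep the one with the highest gain. The sketch below assumes a single numeric feature and a binary split. The function name `best_threshold` and the sample ages are made up for illustration.

```python
from collections import Counter
from math import log2

def entropy(labels):
    total = len(labels)
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

def best_threshold(values, labels):
    """Return (cut, gain) for the binary split 'value <= cut' with the highest gain."""
    parent = entropy(labels)
    n = len(labels)
    distinct = sorted(set(values))
    best_cut, best_gain = None, 0.0
    # Candidate cuts are the midpoints between neighboring distinct values.
    for low, high in zip(distinct, distinct[1:]):
        cut = (low + high) / 2
        left = [y for v, y in zip(values, labels) if v <= cut]
        right = [y for v, y in zip(values, labels) if v > cut]
        weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
        gain = parent - weighted
        if gain > best_gain:
            best_cut, best_gain = cut, gain
    return best_cut, best_gain

ages = [22, 25, 30, 35, 40, 52]                  # hypothetical feature values
bought = ["no", "no", "no", "yes", "yes", "yes"]  # hypothetical labels

print(best_threshold(ages, bought))  # (32.5, 1.0)
```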

Applications In Data Science

Information Gain is a key concept in data science. It helps measure how much information a feature gives about the target variable. This measure guides many processes in data analysis and machine learning. Its use improves the quality and effectiveness of models.

Data scientists use Information Gain to select important features. It also helps enhance model accuracy. It plays a big role in classification tasks. Each of these areas benefits from clearer, better data insights.

Feature Selection

Information Gain helps pick the best features for a model. It ranks features by how much they reduce uncertainty about the target. Features with high Information Gain carry more useful signal, while features with a gain near zero are candidates for removal as noisy or irrelevant. The result is often a simpler and faster model, and sometimes a more accurate one.
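A ranking like this takes only a few lines of Python. The sketch below scores each feature separately and sorts by gain. The dataset, the feature names, and the helper names are hypothetical.

```python
from collections import Counter, defaultdict
from math import log2

def entropy(labels):
    total = len(labels)
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

def information_gain(values, labels):
    groups = defaultdict(list)
    for value, label in zip(values, labels):
        groups[value].append(label)
    weighted = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - weighted

# Hypothetical customer data; feature names and values are made up.
data = {
    "contract": ["monthly"] * 4 + ["yearly"] * 4,
    "region": ["north", "south"] * 4,
}
churned = ["yes", "yes", "yes", "no", "no", "no", "no", "yes"]

# Score every feature, then sort from most to least informative.
ranking = sorted(
    ((information_gain(values, churned), name) for name, values in data.items()),
    reverse=True,
)
for gain, name in ranking:
    print(f"{name}: {gain:.3f}")
# contract: 0.189
# region: 0.000
```

Here "region" splits the data into two groups that are exactly as mixed as the whole, so it scores zero and could be dropped.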

Improving Model Accuracy

Using Information Gain can improve the model's predictions. It focuses the model on the most informative features and keeps uninformative ones from adding noise. This tends to reduce errors and leads to clearer decision rules. Any accuracy gain should still be confirmed on held-out data.

Use In Classification Tasks

Information Gain is vital in building classification trees. It helps decide which feature splits the data best. Each split creates purer groups of data points. This process repeats until a stopping rule is met, such as pure groups or groups that are too small to split further. It steers the model toward the patterns that matter most.

Advantages And Limitations

Information Gain is a popular tool in data analysis. It helps measure how much a feature reduces uncertainty. This section explores its advantages and limitations. Understanding these points helps decide when to use it best.

Strengths In Data Analysis

Information Gain clearly shows which features matter most. It ranks features by how much they reduce disorder. This makes it easy to pick important features. It works well with decision trees and classification tasks. It handles categorical data naturally. It also helps create simpler models that are often more accurate.

Challenges And Biases

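Information Gain can favor features with many distinct values, because splitting data into many small groups lowers entropy almost automatically. This bias might lead to overfitting. It struggles with continuous data unless the values are binned or split at thresholds. Noise in data can affect its accuracy. It also scores each feature on its own, so it can miss features that are informative only in combination, and it cannot tell when two features are redundant.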
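The bias toward many-valued features is easy to reproduce. In this sketch, a unique row identifier, which cannot generalize to new data, receives the highest possible score. The data and names are hypothetical.

```python
from collections import Counter, defaultdict
from math import log2

def entropy(labels):
    total = len(labels)
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

def information_gain(values, labels):
    groups = defaultdict(list)
    for value, label in zip(values, labels):
        groups[value].append(label)
    weighted = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - weighted

contract = ["monthly"] * 4 + ["yearly"] * 4   # a feature with real but partial signal
customer_id = list(range(8))                  # unique per row, no reusable pattern
churned = ["yes", "yes", "yes", "no", "no", "no", "no", "yes"]

print(round(information_gain(contract, churned), 3))     # 0.189
print(round(information_gain(customer_id, churned), 3))  # 1.0
```

Every group created by `customer_id` holds a single sample, so each group is trivially pure and the gain equals the full entropy of the dataset.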

Comparisons With Other Metrics

Information Gain is often compared to Gain Ratio and Gini Index. Gain Ratio, used in C4.5, divides Information Gain by the entropy of the split itself to counter the bias toward features with many values. Gini Index, used in CART, measures data impurity differently but aims for similar goals. Each metric has strengths in different situations. Choosing the right metric depends on the data and task.
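The three metrics can be compared side by side on a small example. This is a minimal sketch under the usual textbook definitions. The function `split_scores` and the toy data are assumptions made for illustration.

```python
from collections import Counter, defaultdict
from math import log2

def entropy(labels):
    total = len(labels)
    return sum((c / total) * log2(total / c) for c in Counter(labels).values())

def gini(labels):
    total = len(labels)
    return 1.0 - sum((c / total) ** 2 for c in Counter(labels).values())

def split_scores(values, labels):
    """Return (information gain, gain ratio, Gini decrease) for one feature."""
    groups = defaultdict(list)
    for value, label in zip(values, labels):
        groups[value].append(label)
    n = len(labels)
    ig = entropy(labels) - sum(len(g) / n * entropy(g) for g in groups.values())
    # Split information: entropy of the feature's own value distribution.
    split_info = entropy(values)
    gain_ratio = ig / split_info if split_info > 0 else 0.0
    gini_decrease = gini(labels) - sum(len(g) / n * gini(g) for g in groups.values())
    return ig, gain_ratio, gini_decrease

# Hypothetical data: "contract" is a strong feature, "customer_id" is unique per row.
contract = ["monthly"] * 4 + ["yearly"] * 4
customer_id = list(range(8))
churned = ["yes", "yes", "yes", "no", "no", "no", "no", "no"]

for name, values in [("contract", contract), ("customer_id", customer_id)]:
    ig, ratio, gini_drop = split_scores(values, churned)
    print(name, round(ig, 3), round(ratio, 3), round(gini_drop, 3))
# contract 0.549 0.549 0.281
# customer_id 0.954 0.318 0.469
```

On this data, Information Gain and the Gini decrease both prefer the identifier column, while Gain Ratio prefers `contract`. Other datasets can produce a different ordering.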

Enhancing Decision-making

Enhancing decision-making is vital for any business or project. Information Gain helps leaders compare their options by showing which variables say the most about an outcome. This clarity improves how decisions are formed. Teams act faster with clear, useful insights.

Using Information Gain, decisions can rest more on evidence. It reduces guesswork and confusion. Businesses can save time and money by focusing on key data. This method supports strong strategies and better results.

Translating Data Insights

Data alone can be confusing. Information Gain turns raw numbers into clear answers. It highlights important facts that guide actions. Teams understand what to focus on and why. This makes data easy to use in daily work. Clear insights lead to confident decisions.

Case Studies In Business

Many companies use Information Gain to improve results. A retail chain used it to find top-selling products. They adjusted stock based on those insights. Sales went up and waste went down. Another firm used it to spot customer trends. They created offers that matched client needs. This led to happier customers and more profits.

Future Trends

Information Gain will grow in importance with big data. Machine learning will make it faster and smarter. More industries will use it to improve choices. Tools will become easier for everyone to use. This means smarter decisions across all fields.

Frequently Asked Questions

What Is Information Gain In Machine Learning?

Information gain measures how well a feature splits data into classes. It helps select the most informative attribute during decision tree construction. Higher information gain means the feature is more relevant to the target, which usually supports better model accuracy.

How Is Information Gain Calculated?

Information gain is calculated by subtracting the weighted entropy of child nodes from the parent node’s entropy. This quantifies the reduction in uncertainty after a data split.

Why Is Information Gain Important For Decision Trees?

Information gain guides the selection of features that best separate data classes. It helps build efficient, accurate decision trees by choosing splits that maximize information gain.

What Role Does Entropy Play In Information Gain?

Entropy measures data impurity or disorder. Information gain uses entropy to quantify how much a split reduces uncertainty in target classification.

Conclusion

Information gain helps you find the best features in data. It measures how much a feature reduces uncertainty. Decision trees use it to choose splits that separate the classes well. It guides choices in machine learning and data analysis. Understanding this concept improves how you work with data.

Keep practicing to see its benefits clearly. Small steps lead to better results over time. Information gain is a useful tool for clearer insights. Try applying it in your next project. You will notice better decisions and smarter models.